Predictive modeling in the realm of data science involves not just algorithms, but a meticulous understanding of data and the art of feature engineering. In this guide, we will explore the intricate world of enhancing predictive modeling with Scikit-Learn pipelines. Whether you’re a student venturing into the world of machine learning or a professional seeking to bolster your skills, this guide will equip you with essential knowledge.

Understanding the Power of Feature Engineering

At the heart of predictive modeling lies feature engineering. It’s not merely about selecting data; it’s about transforming raw information into meaningful insights. Imagine tackling a spam email classification task. By converting raw text data into features like word frequency or the presence of specific keywords, machine learning algorithms gain the ability to distinguish between spam and non-spam emails accurately. This process is the essence of feature engineering. While large deep learning models can usually learn these patterns on their own, more lightweight models might need some more help.

Examples of Feature Engineering

- Text Data: Extract features such as word length, existence of certain keywords, word frequencies, etc.

- Datetime Data: Extract year, month, day, and hour from timestamps to recognize temporal patterns. Additionally, you can categorize dates by day of the week, weekend vs weekday, morning vs. afternoon, holidays, among others.

- Geographical Data: Integrate external demographic data and merge geographical and datetime data to reveal correlations, like weather impact on incidents.
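
As a minimal sketch of the datetime example above, the snippet below derives calendar and time-of-day features with pandas. The column name `opened_at` and the sample timestamps are hypothetical and chosen only for illustration.

```python
import pandas as pd

# Hypothetical timestamps, used only for illustration.
df = pd.DataFrame({
    "opened_at": pd.to_datetime([
        "2024-03-01 08:15:00",  # a Friday morning
        "2024-03-02 14:30:00",  # a Saturday afternoon
        "2024-03-04 23:05:00",  # a Monday night
    ])
})

ts = df["opened_at"].dt
df["year"] = ts.year
df["month"] = ts.month
df["day"] = ts.day
df["hour"] = ts.hour
df["day_of_week"] = ts.dayofweek       # Monday = 0, Sunday = 6
df["is_weekend"] = ts.dayofweek >= 5   # Saturday or Sunday
df["is_morning"] = ts.hour < 12        # before noon
```

Holiday flags would need an external calendar, so they are left out of this sketch.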

Integrating Feature Engineering into Scikit-Learn Pipelines

Pipelines in Scikit-Learn are not just convenient; they are indispensable. They provide a systematic approach to data processing, ensuring uniformity and guarding against data leakage.

Data leakage can significantly impact the reliability of your model. Pipelines act as shields, guaranteeing that preprocessing steps, including feature engineering, are applied consistently during both training and testing. For instance, when computing aggregate statistics, pipelines ensure calculations are based solely on the training data, enhancing the model’s credibility.

Cross-validation is a potent technique to evaluate a model’s performance. When preprocessing steps are integrated into pipelines, they are refit on the training portion of each fold, so the statistics they learn never include that fold’s validation data. The resulting scores are a more honest estimate of how the model will perform on unseen data.
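
To make this concrete, here is a small sketch on synthetic data. It assumes a scaler and a Ridge regressor as stand-ins for the real preprocessing and model; the point is only that `cross_val_score` refits the whole pipeline, scaler included, on the training part of each fold.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic regression data, used only for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.1, size=200)

# The scaler lives inside the pipeline, so its mean and variance are
# learned from the training rows of each fold only.
pipe = Pipeline([
    ("scale", StandardScaler()),
    ("model", Ridge(alpha=1.0)),
])

cv = KFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(pipe, X, y, cv=cv, scoring="neg_mean_absolute_error")
mae_per_fold = -scores  # scikit-learn reports negated errors
```

Scaling `X` once before cross-validation would instead let every fold's validation rows influence the scaler, which is the leakage the pipeline avoids.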

Practical Application: Predicting IT Service Ticket Durations

Let’s delve into a real-world project to grasp the practical implications of these techniques. Our challenge was predicting the duration between a ticket update (‘sys_update_at’) and the ticket’s closing time (‘closed_at’) in an IT service setting. Each unique incident ticket is denoted by an identifier, ‘number’, and each row with the same number represents one update to that ticket. Some columns hold information that is only uncovered through investigation, sometime in the middle of the ticket’s lifespan. In our dataset, however, this information is propagated to all rows of that incident ticket. Since we do not know at which stage these columns first became available, we exclude them.

Exploratory Data Analysis

The following are the findings of our brief exploratory data analysis:

“New” and “Active” ticket states have more than 30,000 rows each. Note that this counts update instances with that state, and not just unique ticket numbers. A ticket can cycle between states throughout its life cycle.

Although very few, tickets in the “Awaiting Evidence” and “Awaiting Vendor” states show a very wide range in the number of days before they were finally closed. This is expected, as these states are usually out of the hands of the service team. It is noteworthy that once a ticket is “Resolved”, it is closed in about 5 days. The variation is too small to be visible, which implies some rule-based closing.

Ticket numbers with outlier durations were removed. After this step, the “Awaiting Vendor” state is less varied, and the “Awaiting Evidence” state no longer appears at all.

When splitting by ticket priority, it can be seen that higher-priority tickets get closed faster, but not by much. This might imply that higher-priority tickets are also more complex, so everything roughly evens out to a median of about 9 days.

We will also be excluding tickets that never reached the ‘Resolved’ state. For the final dataset, the ‘Closed’ states of the tickets were also removed as there is no longer a need to predict at that stage. Our final dataset contains about 19,200 unique incident tickets, down from the original 20,700.

Building the Predictive Model

Constructing a predictive model involves a series of systematic steps, and Scikit-Learn pipelines simplify this process.

- Datetime Feature Extraction: Dissected date and time variables for nuanced temporal insights.

- Target Encoding: Enhanced the model’s understanding of categorical data.

- Handling Missing Data: Imputed missing values intelligently using the ‘most_frequent’ strategy.

- Feature Selection: Variance Threshold ensured the model focused on significant features, enhancing prediction accuracy.

- Model Selection: XGBoost Regressor, a robust gradient boosting algorithm, was employed for its adaptability and efficiency.
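
The imputation, feature selection, and model steps listed above can be chained roughly as follows. This is a sketch under stated assumptions: the feature names and values are hypothetical, the datetime and target-encoding steps are omitted because they rely on custom transformers, and scikit-learn's `GradientBoostingRegressor` stands in for the XGBoost Regressor so that the example depends only on scikit-learn.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.feature_selection import VarianceThreshold
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline

# Hypothetical, already-numeric features; "constant" has zero variance.
X = pd.DataFrame({
    "priority": [1.0, 2.0, np.nan, 3.0, 2.0, 1.0],
    "reassignments": [0.0, 3.0, 1.0, np.nan, 2.0, 0.0],
    "constant": [1.0, 1.0, 1.0, 1.0, 1.0, 1.0],
})
y = pd.Series([2.0, 9.0, 5.0, 12.0, 7.0, 1.5], name="days_to_close")

pipe = Pipeline([
    # Fill missing values with the most frequent value of each column.
    ("impute", SimpleImputer(strategy="most_frequent")),
    # Drop features whose variance is zero.
    ("select", VarianceThreshold(threshold=0.0)),
    # Stand-in for the XGBoost Regressor used in the project.
    ("model", GradientBoostingRegressor(random_state=0)),
])
pipe.fit(X, y)

n_kept = pipe.named_steps["select"].get_support().sum()  # features that survived selection
preds = pipe.predict(X)
```

Because every step is part of one estimator, a single `fit` call learns the imputation values, the selected features, and the model from the same training data.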

Transformer Implementation

The code below shows how the feature engineering steps were implemented in Scikit-Learn. For the target encoding, we encode categories by the mean of the target variable for that category. Note that the mean is computed only during the “fit” step. That value is saved, and when we “transform” the test data, we just use the pre-calculated values from the training set.

```python
import pandas as pd
from sklearn.base import BaseEstimator, TransformerMixin


# Custom transformer that splits datetime columns into numeric components
class SplitDateTimeTransformer(BaseEstimator, TransformerMixin):
    def fit(self, X, y=None):
        # Nothing to learn; the transformation is stateless
        return self

    def transform(self, X):
        X_transformed = X.copy()
        cols = X_transformed.select_dtypes(include=['datetime64']).columns.to_list()
        for col in cols:
            for component in ['year', 'month', 'day', 'hour', 'minute', 'second']:
                new_col_name = f'{col}_{component}'
                X_transformed[new_col_name] = getattr(X_transformed[col].dt, component).astype(float)
        # Drop the original datetime columns once their components are extracted
        X_transformed.drop(columns=cols, inplace=True)
        return X_transformed


# Custom transformer for target encoding
class TargetEncoder(BaseEstimator, TransformerMixin):
    def fit(self, X, y=None):
        # y must be a named pandas Series so it can be joined to X
        cols = X.columns.to_list()
        temp_df = pd.concat([X, y], axis=1)
        category_means_ = []
        for col in cols:
            # Mean of the target per category, computed on the training data only
            category_means_.append(temp_df.groupby(col)[y.name].mean().to_dict())
        self.category_means_ = category_means_
        return self

    def transform(self, X):
        X_encoded = X.copy()
        cols = X_encoded.columns.to_list()
        for i, col in enumerate(cols):
            # Reuse the means saved during fit; categories unseen in training become NaN
            X_encoded[col] = X[col].map(self.category_means_[i])
        return X_encoded
```

Evaluating Model Performance

To split the data between training and testing, we sorted it by creation date and ticket number. Older tickets are used for training and newer tickets for testing, and no rows with the same ‘number’ are split between the two sets. A baseline “naive” model is constructed by randomly drawing values from a Poisson distribution with the same mean as the training data’s target column. This tries to mimic the distribution of values from the training set. We then measure its performance against the actual values using the Root Mean Square Error (RMSE) and Mean Absolute Error (MAE).
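
A rough sketch of this split and baseline is shown below. The data frame, the column names `opened_at` and `days_to_close`, and the 60% training share are assumptions made for illustration; they are not taken from the project.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import mean_absolute_error, mean_squared_error

# Hypothetical update log: several rows per ticket 'number'.
df = pd.DataFrame({
    "number": ["INC1", "INC1", "INC2", "INC2", "INC3", "INC4", "INC4", "INC5"],
    "opened_at": pd.to_datetime([
        "2024-01-01", "2024-01-01", "2024-01-03", "2024-01-03",
        "2024-01-05", "2024-01-08", "2024-01-08", "2024-01-10",
    ]),
    "days_to_close": [9.0, 6.0, 12.0, 4.0, 7.0, 10.0, 3.0, 8.0],
})

# Order tickets by creation date, then ticket number, and keep each ticket once.
ticket_order = (
    df.sort_values(["opened_at", "number"])["number"].drop_duplicates().to_list()
)

# Assumed split: the oldest 60% of tickets train, the rest test.
n_train = round(len(ticket_order) * 0.6)
train_ids = set(ticket_order[:n_train])
train = df[df["number"].isin(train_ids)]
test = df[~df["number"].isin(train_ids)]

# Naive baseline: Poisson draws whose mean matches the training target.
rng = np.random.default_rng(0)
baseline_pred = rng.poisson(lam=train["days_to_close"].mean(), size=len(test))

rmse = np.sqrt(mean_squared_error(test["days_to_close"], baseline_pred))
mae = mean_absolute_error(test["days_to_close"], baseline_pred)
```

Splitting on ticket identifiers rather than on rows is what keeps all updates of one ticket on the same side of the split.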

Baseline Model:

RMSE: 5.33 days

MAE: 4.31 days

For the pipeline, we used random search to try out various combinations of hyperparameters and keep the one that yields the best results. Cross-validation used a Group K-Fold: while the ‘number’ column is not used as a feature by the XGBoost Regressor, it is used to ensure that rows from the same ticket ‘number’ are not separated during cross-validation.
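
The search could be set up along these lines. This is a sketch, not the project's actual configuration: the data is synthetic, the hyperparameter grid and `n_iter` are assumed values, and `GradientBoostingRegressor` again stands in for the XGBoost Regressor.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.impute import SimpleImputer
from sklearn.model_selection import GroupKFold, RandomizedSearchCV
from sklearn.pipeline import Pipeline

# Synthetic data: 30 hypothetical tickets with 4 update rows each.
rng = np.random.default_rng(0)
n_rows = 120
X = rng.normal(size=(n_rows, 4))
y = 9 + 2 * X[:, 0] + rng.normal(scale=0.5, size=n_rows)
groups = np.repeat(np.arange(30), 4)  # plays the role of the ticket 'number'

pipe = Pipeline([
    ("impute", SimpleImputer(strategy="most_frequent")),
    ("model", GradientBoostingRegressor(random_state=0)),
])

# Assumed search space; the "model__" prefix routes each value to the model step.
param_distributions = {
    "model__n_estimators": [50, 100, 200],
    "model__max_depth": [2, 3, 4],
    "model__learning_rate": [0.03, 0.1, 0.3],
}

search = RandomizedSearchCV(
    pipe,
    param_distributions=param_distributions,
    n_iter=5,
    scoring="neg_mean_absolute_error",
    cv=GroupKFold(n_splits=3),  # rows of one group never straddle a fold
    random_state=0,
)
# groups is used only by the splitter, never as a model feature.
search.fit(X, y, groups=groups)
best_mae = -search.best_score_
```

Passing `groups` to `fit` rather than adding it to `X` mirrors the text: the identifier steers the folds but is invisible to the regressor.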

Fine-Tuned Model:

RMSE: 3.63 days

MAE: 2.38 days

Using the trained pipeline cuts the MAE by about 45% (from 4.31 to 2.38 days) and the RMSE by about 32% (from 5.33 to 3.63 days). If we compute the MAE per state, it goes even lower.
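
A per-state breakdown of the error can be computed with a simple group-by. The values below are made up purely to show the mechanics and do not reflect the project's results; the column names are also hypothetical.

```python
import pandas as pd

# Made-up predictions, used only to illustrate the calculation.
results = pd.DataFrame({
    "state": ["New", "New", "Active", "Active", "Resolved", "Resolved"],
    "actual_days": [10.0, 8.0, 7.0, 9.0, 5.0, 5.0],
    "predicted_days": [7.0, 9.0, 8.0, 6.0, 5.0, 4.5],
})

results["abs_error"] = (results["actual_days"] - results["predicted_days"]).abs()
mae_per_state = results.groupby("state")["abs_error"].mean()
overall_mae = results["abs_error"].mean()
```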

Read More

Build an Automated ETL Pipeline. From setting up Docker to utilizing APIs and automating workflows with GitHub Actions, this post goes through it all.

Unlocking Data Science: Your Easy Docker Setup Guide

Ready to dive into data science? Learn how to set up your development environment using Docker for a seamless and reproducible workspace. Say goodbye to compatibility issues and hello to data science success!

In this short project, I scraped all of Lebron's regular season points and plotted them in an interactive graph.

Predicting a Fitness Center’s Class Attendance with Machine Learning

In this project I analyzed a fitness center's attendance data to predict attendance rates of its group classes.

A marketing agency presents a promotional plan to a telecommunication company to increase its subscriber base and generate revenue. Using population and location data, we estimated the feasibility of this plan in this case study.

The tweet that started the trend was posted on June 12 (Philippine Independence day). On that tweet, the author stated that he dined in at a Tropical Hut branch, and he is their only customer. Despite the pandemic “restrictions”, commercial activity on malls and fast food chains are pretty much back to “normal”, so having only one customer is disheartening.

In this project I trained a transformer model to recognize words from audio.

In this article, I fine-tuned a pre-trained object detection model using a small custom dataset.

Fast and Free Deep Learning Demo with Gradio

In this article, we will build a simple deep learning based demo application that can be accessed publicly in Hugging Face spaces

The rate at which unreliable news was spread online in the recent years was unprecedented. In this project, I finetuned some language models to make an unreliable news classifier.

In this article, we will discuss how to quickly showcase your model publicly as a machine learning application for free.

In this article, I will discuss a way of accessing Google Cloud GPUs to train your Deep Learning projects.

Code This case study is my capstone project for the Google Data Analytics Certification offered through Coursera. I was given access to …

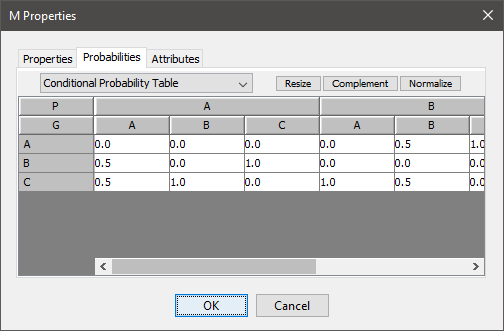

However, to make it a little bit more scalable, the tables are defined in a separate Google sheet, and imported into the Google Colab notebook. Each node is a separate worksheet, and the columns list the parent nodes, the name of the node, and probability.

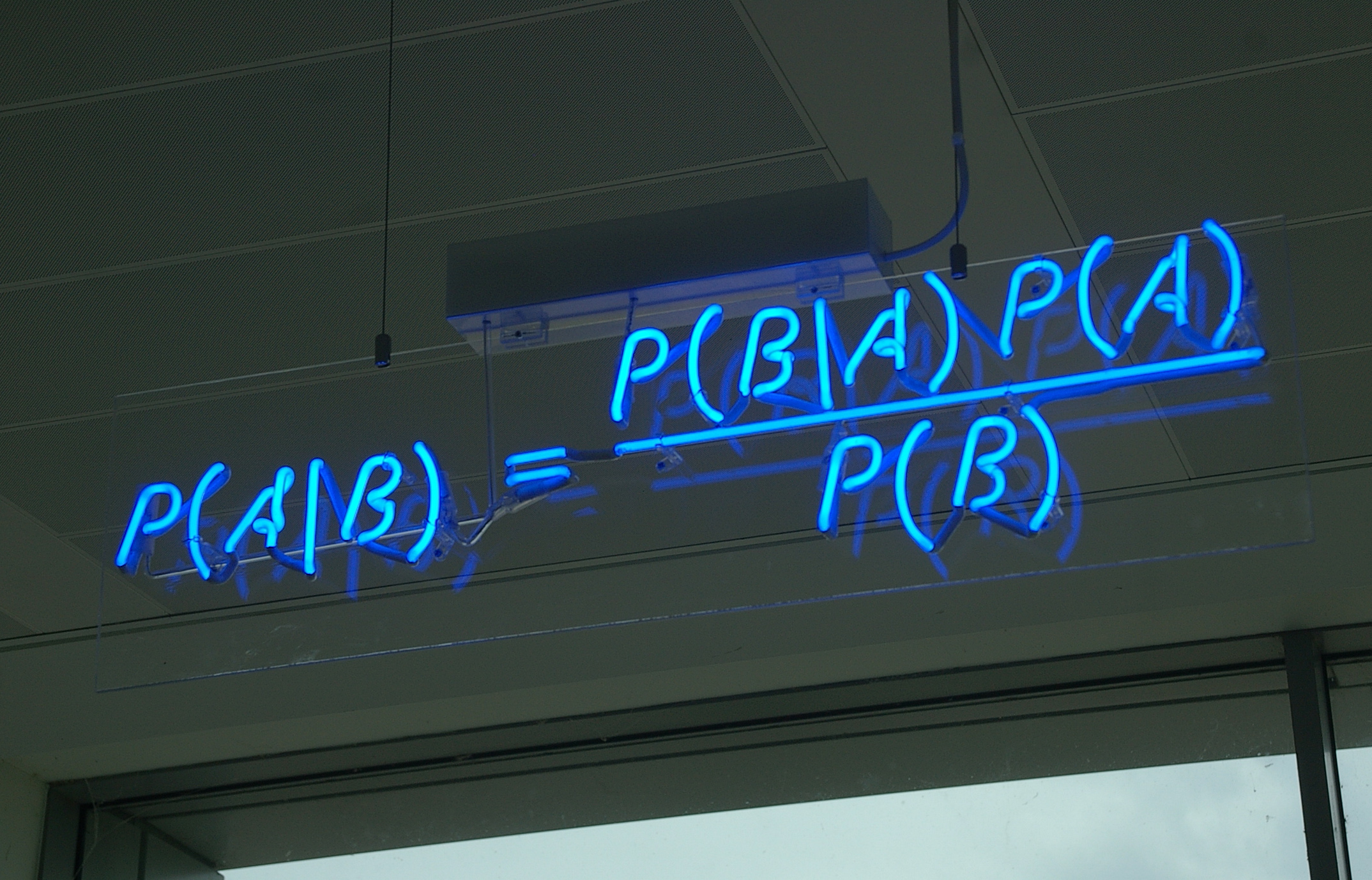

Bayesian Networks are a compact graphical representation of how random variables depend on each other. It can be used to demonstrate the …

In this article we will break down what the Fourier Transform does to a signal, then we will be using Python to compute and visualize the transforms of different waveforms.

Conclusion

In this post, we have discussed the power of feature engineering and the significance of pipelines, and witnessed their application in a real-world scenario. Mastery of predictive modeling does not rely only on choosing models and writing code. It is also important that we learn how to make the most out of our data. While a non-exhaustive list of ideas was presented above, much of this will come down to domain knowledge, experience, and experimentation.