Bayesian networks are a compact graphical representation of how random variables depend on each other. They can be used to compute the probability of one variable taking a given value, depending on which related variables have been observed. In a previous blog post, we used a simulation tool to construct and test our network. In this post, we cover the same example using pomegranate, a Python package that implements fast and flexible probabilistic models ranging from individual probability distributions to compositional models such as Bayesian networks and hidden Markov models.

The problem that we modeled goes as follows:

In a game show, there are three doors, and behind only one of those doors is a prize. The guest picks the door that they think will lead to the prize. The host (Monty), knowing the location of the prize, will open one of the two remaining doors. The door he opens is always empty. The host will then ask the guest if they want to switch doors or stick with their initial choice. What is the probability that the guest picked the correct door?

For a simple problem such as this, we could define the conditional probability tables directly in the code. However, to make the approach more scalable, the tables are defined in a separate Google Sheet and imported into the Google Colab notebook. Each node has its own worksheet, whose columns list the parent nodes, the node itself, and the probability. The table below is a snippet of the sheet for the ‘monty’ node.

| guest | prize | monty | probability |
| --- | --- | --- | --- |
| A | A | A | 0 |
| A | A | B | 0.5 |
| A | A | C | 0.5 |
| A | B | A | 0 |
| A | B | B | 0 |
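The snippet shows only the first five of the 27 rows in the full table. As a rough sketch of the rule behind it (Monty never opens the guest's door or the prize door, and chooses at random when two doors qualify), the rows can be generated in plain Python. The names `DOORS` and `monty_cpt_rows` are illustrative and are not taken from the original notebook or spreadsheet.

```python
from itertools import product

DOORS = ["A", "B", "C"]


def monty_cpt_rows():
    """Return [guest, prize, monty, probability] rows for every combination."""
    rows = []
    for guest, prize in product(DOORS, repeat=2):
        # Monty may only open a door that is neither picked nor hiding the prize
        allowed = [d for d in DOORS if d not in (guest, prize)]
        for monty in DOORS:
            p = 1 / len(allowed) if monty in allowed else 0.0
            rows.append([guest, prize, monty, p])
    return rows


rows = monty_cpt_rows()
for row in rows[:5]:
    print(row)  # the same five combinations as the spreadsheet snippet
```

Generating the table this way is also a handy check on a hand-typed sheet: for every guest and prize pair, the three probabilities should add up to 1.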

Constructing the Nodes

For the root nodes, which are defined by uniform probability distributions, we use the DiscreteDistribution class. It needs a dictionary with the events as ‘keys’ and the probabilities as ‘values’. The code for this step reads the data from our spreadsheet into a pandas DataFrame and then converts it to a dictionary.
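As a minimal sketch of that conversion, the example below turns a root node's worksheet into the dictionary shape described above. To keep it free of dependencies, it reads a CSV string with the standard library instead of a pandas DataFrame; `GUEST_SHEET` and `sheet_to_distribution` are hypothetical stand-ins for the real sheet export and notebook code.

```python
import csv
import io

# Hypothetical CSV export of the 'guest' worksheet (a root node, so no parent columns)
GUEST_SHEET = """guest,probability
A,0.3333333333
B,0.3333333333
C,0.3333333333
"""


def sheet_to_distribution(csv_text, node_name):
    """Map each event of a root node to its probability."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[node_name]: float(row["probability"]) for row in reader}


guest_probs = sheet_to_distribution(GUEST_SHEET, "guest")
print(guest_probs)
```

With a DataFrame `df` holding the same two columns, `dict(zip(df["guest"], df["probability"]))` should give the same dictionary, which is then passed to DiscreteDistribution.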

This spreadsheet import method shines when we construct child nodes, because the list of combinations in their conditional probability tables can get large. The ConditionalProbabilityTable class takes a list of lists, where each inner list holds one combination of parent values, the value of this node, and the probability. Its second parameter is a list of the parent distributions as objects; in our case, we pass the DiscreteDistribution objects initialized earlier. Note that the order of the objects in the second parameter must match the order of the parent columns in our spreadsheet.
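Because the column order matters, it can help to check it while converting the sheet into a list of lists. The sketch below does this with the standard library only; `MONTY_SHEET` and `sheet_to_cpt_rows` are hypothetical names, and the CSV string stands in for the real worksheet.

```python
import csv
import io

# Hypothetical CSV export of the first rows of the 'monty' worksheet
MONTY_SHEET = """guest,prize,monty,probability
A,A,A,0
A,A,B,0.5
A,A,C,0.5
"""


def sheet_to_cpt_rows(csv_text, parents, node_name):
    """Return [parent values..., node value, probability] rows.

    Raises ValueError if the sheet's columns are not in the expected order.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    expected = list(parents) + [node_name, "probability"]
    if header != expected:
        raise ValueError(f"expected columns {expected}, got {header}")
    # Keep the labels as strings and convert only the probability to a float
    return [row[:-1] + [float(row[-1])] for row in reader]


monty_rows = sheet_to_cpt_rows(MONTY_SHEET, ["guest", "prize"], "monty")
print(monty_rows)
```

The list passed as `parents` here mirrors the list of parent distribution objects given to ConditionalProbabilityTable, so a mismatch is caught before the table is built.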

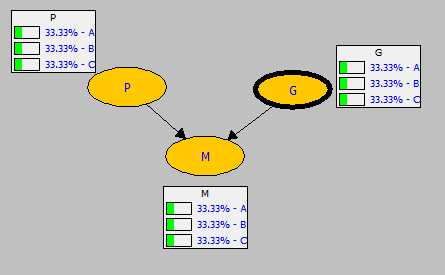

To turn the distributions into Bayesian network nodes, we wrap each one in the Node class. Next, we initialize the model with the BayesianNetwork class and add the nodes we created with the add_states() method. Then we connect the nodes with the add_edge() method, passing the parent node first and the child node second. Finally, we call the bake() method to finalize our model.
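Put together, the construction might look like the sketch below. It assumes the legacy (pre-1.0) pomegranate API, which is where the class names used in this post come from; later releases were rewritten and do not expose them. The function name `build_monty_network` and its parameters are illustrative, and the sketch connects nodes with `add_edge()`, the call the legacy pomegranate examples use for Bayesian network edges.

```python
def build_monty_network(guest_probs, prize_probs, monty_rows):
    """Assemble the three-node network with the legacy (pre-1.0) pomegranate API."""
    # Imported lazily: these names are assumed to exist only in pomegranate < 1.0
    from pomegranate import (
        BayesianNetwork,
        ConditionalProbabilityTable,
        DiscreteDistribution,
        Node,
    )

    # Root nodes: plain discrete distributions built from {event: probability}
    guest = DiscreteDistribution(guest_probs)
    prize = DiscreteDistribution(prize_probs)

    # Child node: parents are listed in the same order as the table's columns
    monty = ConditionalProbabilityTable(monty_rows, [guest, prize])

    # Wrap each distribution in a named node
    s_guest = Node(guest, name="guest")
    s_prize = Node(prize, name="prize")
    s_monty = Node(monty, name="monty")

    model = BayesianNetwork("Monty Hall Problem")
    model.add_states(s_guest, s_prize, s_monty)
    # Edges run from parent to child
    model.add_edge(s_guest, s_monty)
    model.add_edge(s_prize, s_monty)
    model.bake()
    return model
```

The function takes the two dictionaries for the root nodes and the list of lists for the ‘monty’ table, so it can be called again whenever the spreadsheet values change.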

If you change the probability values in the spreadsheet, you must re-run this whole section of code before testing.

Testing the Network's Beliefs

To test the behavior of the network, we use the predict_proba() method. It takes a dictionary where each ‘key’ is the name of a node that you want to observe and each ‘value’ is the observed value. If you print the method's return value, it shows the nodes and their resulting probabilities. As before, we set the guest's pick to door A and the door opened by the host to door B. The probability that the prize is behind the remaining door C is about 67% (2/3).

    "parameters" : [ {
        "A" : 0.3333333333333334,
        "B" : 0.0,
        "C" : 0.6666666666666664
    } ]
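These numbers can be cross-checked without pomegranate by applying Bayes' rule directly. The sketch below enumerates the three possible prize doors, weights each by the uniform priors and by Monty's conditional probability, and normalizes. The function names are illustrative, and the rule for Monty's choice is the one encoded in the ‘monty’ table.

```python
DOORS = ["A", "B", "C"]


def p_monty(monty, guest, prize):
    """P(monty | guest, prize): Monty opens a door that is neither picked nor the prize."""
    allowed = [d for d in DOORS if d not in (guest, prize)]
    return 1 / len(allowed) if monty in allowed else 0.0


def posterior_prize(guest_obs, monty_obs):
    """P(prize | guest, monty), computed by enumerating the prize doors."""
    # Joint weight for each prize door, with uniform 1/3 priors on guest and prize
    weights = {
        prize: (1 / 3) * (1 / 3) * p_monty(monty_obs, guest_obs, prize)
        for prize in DOORS
    }
    total = sum(weights.values())
    if total == 0:
        raise ValueError("the observed combination has zero probability")
    return {prize: w / total for prize, w in weights.items()}


print(posterior_prize("A", "B"))
```

Up to floating-point rounding, the result should agree with the ‘prize’ entry above: 1/3 for door A, 0 for door B, and 2/3 for door C.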

And with that, you have modeled the Monty Hall problem in Python using pomegranate. The result can be very non-intuitive to a lot of people (including me, when I first saw this problem). One explanation that I found very useful is this: in the beginning, the guest has a 1/3 chance of picking the door with the prize, and there is a 2/3 chance that the prize is somewhere else. Monty opening a door that is always empty doesn’t change that probability. So with two doors remaining, there is still a 2/3 chance that the prize is behind the non-chosen door.
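If the argument still feels slippery, a quick simulation can make it concrete. The sketch below is not part of the pomegranate model; it simply plays the game many times with Python's `random` module and counts how often sticking and switching win. The function name, trial count, and seed are arbitrary choices.

```python
import random


def simulate(trials=100_000, seed=0):
    """Estimate the win rates for sticking with and switching from the first pick."""
    rng = random.Random(seed)
    doors = ["A", "B", "C"]
    stick_wins = switch_wins = 0
    for _ in range(trials):
        prize = rng.choice(doors)
        guest = rng.choice(doors)
        # Monty opens an empty door that the guest did not pick
        monty = rng.choice([d for d in doors if d not in (guest, prize)])
        # The one door left after the guest's pick and Monty's reveal
        other = next(d for d in doors if d not in (guest, monty))
        stick_wins += guest == prize
        switch_wins += other == prize
    return stick_wins / trials, switch_wins / trials


stick, switch = simulate()
print(f"stick: {stick:.3f}, switch: {switch:.3f}")
```

With enough trials, the two rates should settle near 1/3 and 2/3, matching the network's answer.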